1. Adjoint estimation: low or high order?

Adjoint methods have been systematically associated to the optimal control design [5] and their applications to aerodynamics [1, 4]. During the last decades, several works were oriented to develop a robust control theory based on the concepts of observability, optimality and controllability for linear and non-linear equations and systems of equations [2, 10, 11, 12].

Since the discrete approach forces to use the discrete adjoint problem of the flow solver to numerically solve the adjoint equation, the continuous approach is adopted due to its valuable flexibility of using different solvers for the flow and adjoint equations. It is well known that problems where high frequencies play an important role such as the control of wave equation [11]

The focus is put on the 2D linear scalar transport equation, that can be expressed in a conservative form as follows:

\begin{equation}

\dfrac{\partial u(x,y,t)}{\partial t} + \nabla \cdot (\mathbf{v} u) = 0 \qquad u(x,y,0)=u_0\label{generalEqIntro}

\end{equation}

where $\mathbf{v}=v(x,y)$ is a time-independent velocity field of propagation.

Given a target function $u^*=u^*(x,y)$, the aim in the 2D inverse design problem consists in finding $u_0$ such that $u(T) \sim u^*$ via the minimization of a functional $J$:

\begin{equation}

J(u)=\dfrac{1}{2} |u(T)-u^*|^2

\end{equation}

This problem can be easily addressed by simply solving the transport equation backwards in time because of the time reversibility of the model. But such a simple approach fails as soon as the model involves nonlinearities (leading to shock discontinuities) or diffusive terms, making the system time-irreversible.

We are thus interested in the development of gradient descent methods with the aid of the adjoint equation (solved backwards in time) that can be easily deduced

\begin{equation}

- \dfrac{\partial \sigma(x,y,t)}{\partial t} - \mathbf{v} \cdot \nabla \sigma = 0, \qquad \sigma(T)= u(T)- u^*

\label{generalAdjIntro}

\end{equation}

where $\sigma=\sigma(x,y,t)$ is the adjoint variable.

In order to achieve a good match with the continuous solutions, high order numerical schemes need to be used for the forward state equation (\ref{generalEqIntro}). Here we shall use a second order scheme. Our main objective is to test the convenience of using the same order of accuracy when solving the adjoint equation or, by the contrary, to employ a low order one. When implementing the gradient descent iterations, the numerical scheme employed for solving the adjoint equation determines the direction of descent. Hence, different solvers for the adjoint system provide different results that can be compared in terms of accuracy and efficiency.

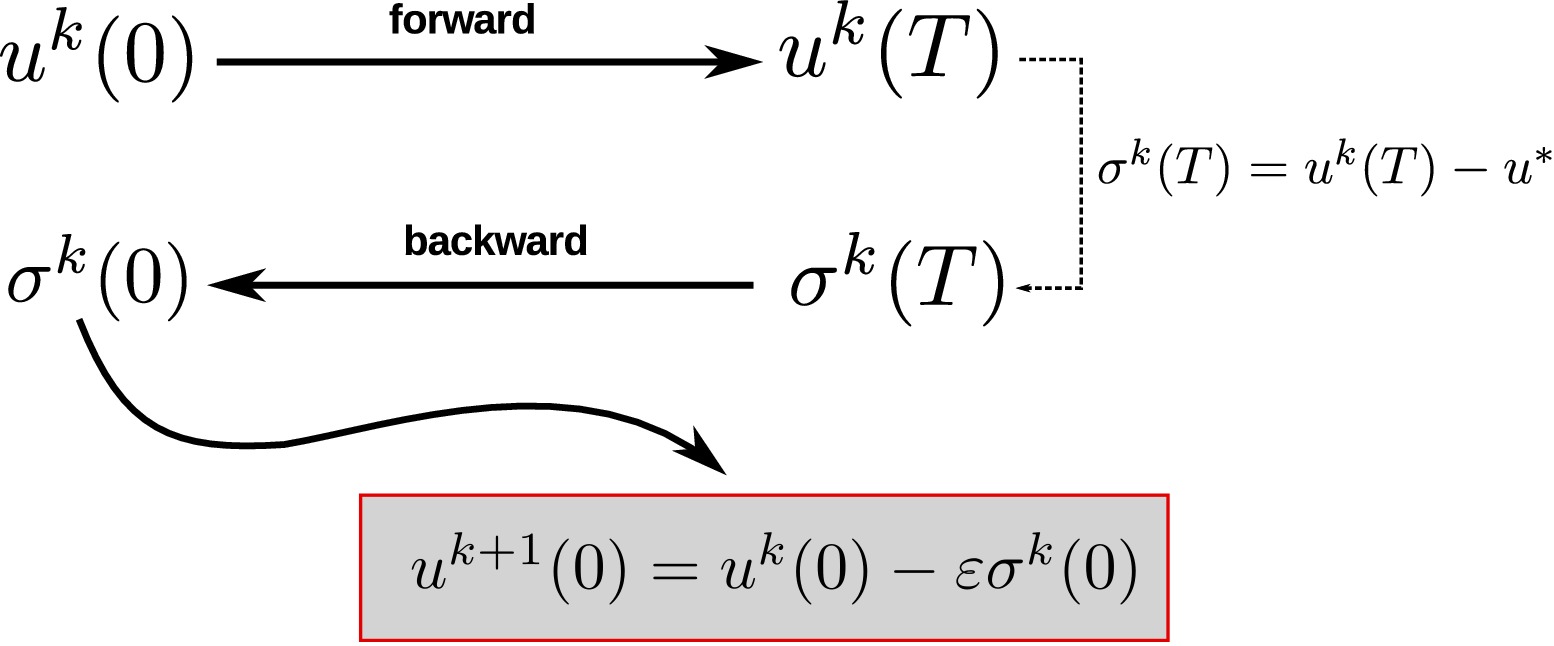

A gradient-adjoint iterative method is based on iterating a loop where the equation of state (flow equation) is solved in a forward sense while the adjoint equation, which is of hyperbolic nature as well, is solved backwards in time (see Figure 1).

The adequate resolution of this loop is the main question addressed in this work, paying attention not only to accuracy but also to reducing its computational complexity. One could expect that the choice of high order numerical methods to solve both the equation of state and the adjoint one should provide the best results in terms of accuracy, at the prize of a high computational cost. But this is not always true and it is possible to relax the necessity of using the same order of accuracy for the resolution of the adjoint equation, not only reducing considerably the computational time needed by the complete loop but also achieving the same order of accuracy on the approximation of the inverse design, which is our ultimate goal.

2. 2D linear equation and continuous adjoint derivation

We focus on the following conservative linear transport scalar equation with an heterogeneous time-independent vector field $\textbf{v} = \textbf{v} (x,y):$

\begin{equation}

\dfrac{\partial u}{\partial t} + \nabla \cdot (\mathbf{v} u) = 0 .

\label{generalEq}

\end{equation}

Obviously, the solution $u=u(x, y, t)$ exists and it is unique and can be determined by means of the method of characteristics provided $\textbf{v}$ is smooth enough (say, $C^1$). Thus, for all initial data $u_0 \in L^2(\mathbb{R}^2)$ there exists an unique solution in the class $C([0, T]; L^2(\mathbb{R}^2))$.

Let us consider the inverse design problem: Given a target function $u^* = u^*(x,y)$ at $t=T$, to determine the initial condition $u_0$ such that $u(x, y, T) \equiv u^*(x,y)$.

The problem could be easily addressed solving the equation (\ref{generalEq}) backwards in time from the final datum $u^*$ at $t=T$, to determine $u_0=u(x, y, 0)$ exactly. But we are interested in addressing it from the point of view of optimal control. Let us therefore define the following functional, as a classical measure of the quadratic error with respect to the target function $u^*$:

\begin{equation}

J(u)=\dfrac{1}{2} \int_{\Omega} \left(u(T)-u^* \right)^2 dS

\label{functional}

\end{equation}

The derivation of the adjoint equation can be achieved by simply multiplying by $\sigma=\sigma(x,y,t)$ and integrating over a control volume $\Omega \times [0,T]$

\begin{equation}

\int_0^T \int_{\Omega} \sigma \left(\dfrac{\partial u}{\partial t} + \nabla \cdot (\textbf{v}u) \right) dS dt=0

\end{equation}

Integrating by parts

\begin{equation}

\int_{\Omega} \sigma u \vert_0^T dS - \int_0^T \int_{\Omega} u \dfrac{\partial \sigma}{\partial t} dS dt +

\int_0^T \oint_{\partial \Omega} u \sigma \textbf{v} \mathbf{n} dl dt - \int_0^T \int_{\Omega} u \textbf{v} \nabla \sigma dS dt=0

\end{equation}

where $\partial \Omega$ is the boundary and $\mathbf{n}$ is the outward normal direction to the surface $\Omega$ respectively.

It is feasible to take Gateaux derivatives with respect to the variable $u$ in this identity getting:

\begin{equation}

\int_{\Omega} \sigma \delta u \vert_0^T dS - \int_0^T \int_{\Omega} \delta u \dfrac{\partial \sigma}{\partial t} dS dt +

\int_0^T \oint_{\partial \Omega} \delta u \sigma \textbf{v} \mathbf{n} dl dt - \int_0^T \int_{\Omega} \delta u \textbf{v} \nabla \sigma dS dt=0

\label{variational1}

\end{equation}

Gathering appropriately the terms:

\begin{equation}

\int_0^T \int_{\Omega} \delta u \overbrace{\left( -\dfrac{\partial \sigma}{\partial t} - \textbf{v} \cdot \nabla \sigma \right)}^{(\sharp)} dS dt + \int_{\Omega} \sigma \delta u \vert_0^T dS + \overbrace{

\int_0^T \oint_{\partial \Omega} \delta u \sigma \textbf{v} \mathbf{n} dl dt}^{(\sharp \sharp)} = 0

\label{variational2}

\end{equation}

Let us select $(\sharp) = 0$

\begin{equation}

-\dfrac{\partial \sigma}{\partial t} - \textbf{v} \cdot \nabla \sigma =0

\label{adjoint}

\end{equation}

and appropriate boundary conditions for $(\sharp \sharp)$ = 0. Hence (9) becomes

Assuming $\sigma(T) = u(T)-u^*$

\begin{equation}

\int_{\Omega} (u(T)-u^*) \delta u (T) dS = \int_{\Omega} \sigma(0) \delta u(0) dS

\label{variational4}

\end{equation}

On the other hand, it is also feasible to take the first variation of $J$ with respect to $u$ in (\ref{functional})

\begin{equation}

\delta J(u)=\int_{\Omega} \delta u (T) \left(u(T)-u^* \right) dS

\label{deltaFunctional}

\end{equation}

Finally,

\begin{equation}

\delta J(u) = \int_{\Omega} (u(T)-u^*) \delta u (T) dS = \int_{\Omega} \sigma(0) \delta u(0) dS

\label{variational5}

\end{equation}

This derivation led to the adjoint equation $(\sharp)$:

\begin{equation}

-\dfrac{\partial \sigma}{\partial t} - \textbf{v} \cdot \nabla \sigma =0 \qquad \sigma(T) = u(T)-u^*,

\label{adjoint}

\end{equation}

$ \sigma =\sigma(x,y,t)$ being the adjoint variable.

This equation measures the sensitivity of the solution to changes in the initial condition. It is worth mentioning that the adjoint equation is solved backwards in time (from $t=T$ to $t=0$). In fact, system (\ref{adjoint}) is well-posed if and only if the original system is well posed in the forward sense.

3. Numerical schemes

In order to obtain a numerical solution based on a finite volume approach, (\ref{generalEq}) is integrated in a control volume $\Omega_i \times [t^n,t^{n+1}]$ where $\Omega_i$ represents each computational cell of the domain and $t^{n+1}=t^n+\Delta t$

\begin{equation}

\int_{t^n}^{t^{n+1}} \int_{\Omega_i} \left(\dfrac{\partial u}{\partial t} + \nabla \cdot (\textbf{v} u) \right) dS dt= 0

\end{equation}

Using the Gauss divergence theorem

\begin{equation}

\int_{\Omega_i} u(x,y,t^{n+1})dS - \int_{\Omega_i} u(x,y,t^{n})dS + \int_{t^n}^{t^{n+1}} \oint_{\partial \Omega_i} \textbf{f} \textbf{n} \; dm \; dt= 0

\label{FV_eq}

\end{equation}

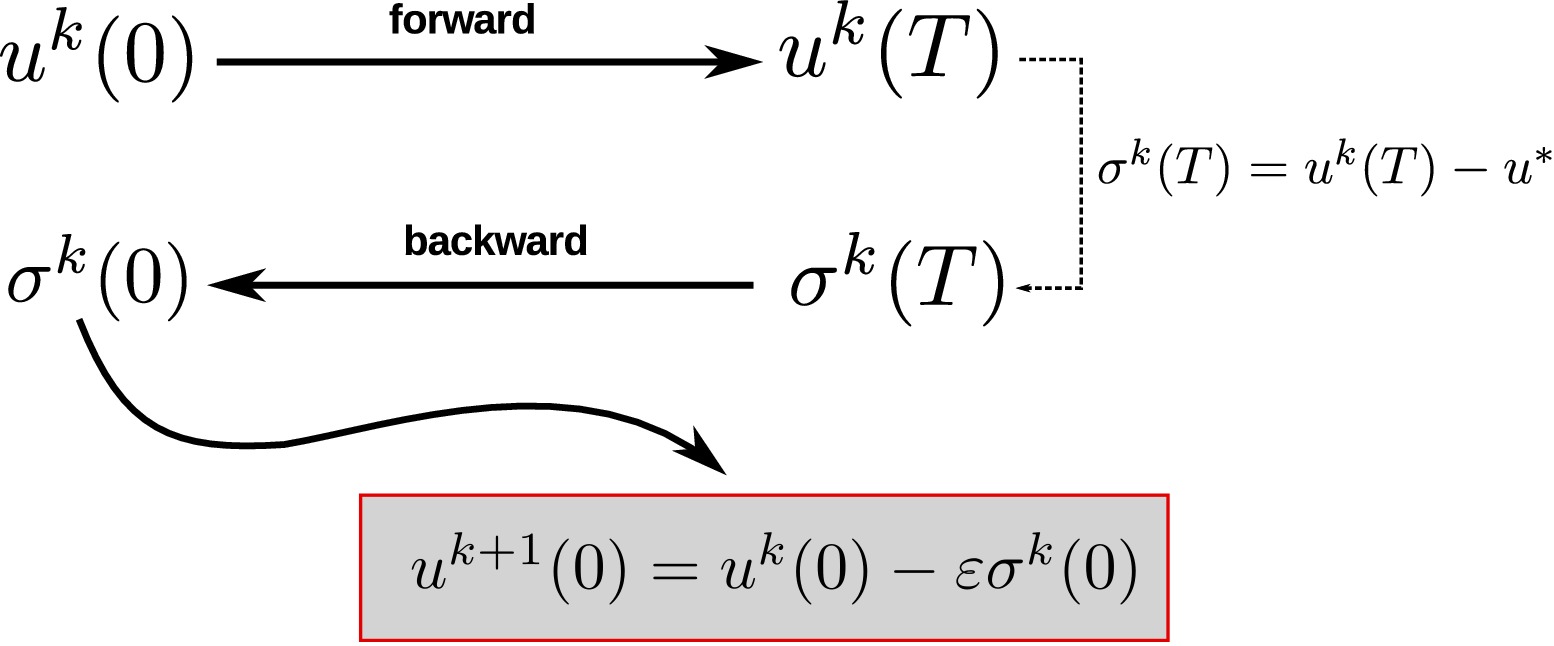

where $\textbf{f} = \textbf{v} u$ is the flux, $\partial \Omega_i $ denotes the surface surrounding the volume $\Omega_i$ and $\textbf{n}=(n_x,n_y)$ its unit normal vector. The last contour integral in (\ref{FV_eq}) can be replaced by the sum of the fluxes defined at each edge $k$ of length $l_k$:

\begin{equation}

\int_{\Omega_i} u(x,y,t^{n+1})dS - \int_{\Omega_i} u(x,y,t^{n})dS + \int_{t^n}^{t^{n+1}} \sum\limits_{k=1}^{N_E} \textbf{f}_k \textbf{n}_k l_k dt= 0

\label{FV_eq2}

\end{equation}

with $N_E$ is the number of edges of each cell $i$ ($N_E=3$ is for triangular grids) with neighbouring cells $j_k$. Figure 2 clarifies the meaning of each variable.

In this work two numerical schemes are proposed: a first order upwind (FOU) scheme and a second order upwind (SOU) scheme based on MUSCL-Hancock approach. The details of the implementation can be found in [7] and [6, 9] respectively. The use of a SOU scheme for the flow equation (forward) is mandatory in order to achieve the best accuracy since the FOU scheme is unable to capture the detail for the equation of state when trying to reach the target function. The issue of using one or another approach for solving the adjoint equation and, consequently, the gradient direction approach, is worth analysing.

4. Test case: Doswell frontogenesis

This test case was firstly proposed (in a forward sense) in [3]. It symbolizes the presence of horizontal temperature gradients and fronts in the context of meteorological dynamics. The original problem consists of a computational domain $\Omega=[-5,5]$ with open boundary conditions in which a front defined by the following initial condition:

\begin{equation}

u(x,y,0)=\tanh\left(\dfrac{y}{\delta}\right)

\label{tc2Exact}

\end{equation}

is advected under the velocity field given by

It is important to mention that the velocity field is divergence-free. The state equation can the be written similarly in divergence or non-divergence form:

\begin{equation}

\dfrac{\partial u}{\partial t} + \nabla \cdot (\mathbf{v} u) = \dfrac{\partial u}{\partial t} + (\nabla \cdot \mathbf{v}) u=0,

\end{equation}

because

$$

\nabla \cdot \mathbf{v} \equiv 0,

$$

This allows us to formulate the problem of inverse design. Given the exact value of the solution at time $t=T$, i.e. giving (\ref{tc2Initial}) as a target function at $t=T$, we aim to recover by a computational version of the gradient-adjoint methodology described above, an accurate and efficient approximation of the initial datum (\ref{tc2Exact}).

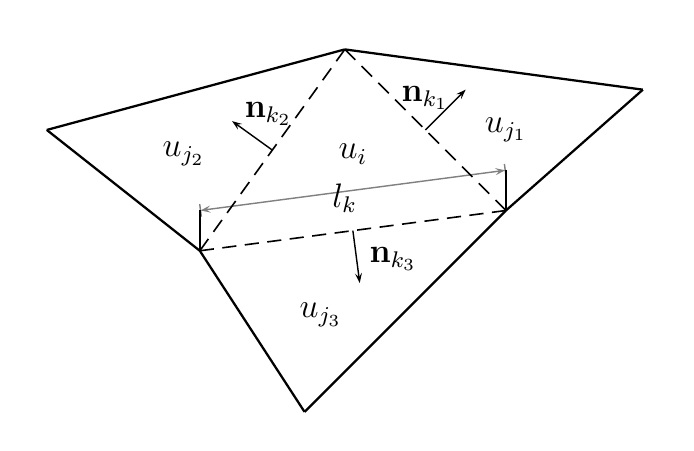

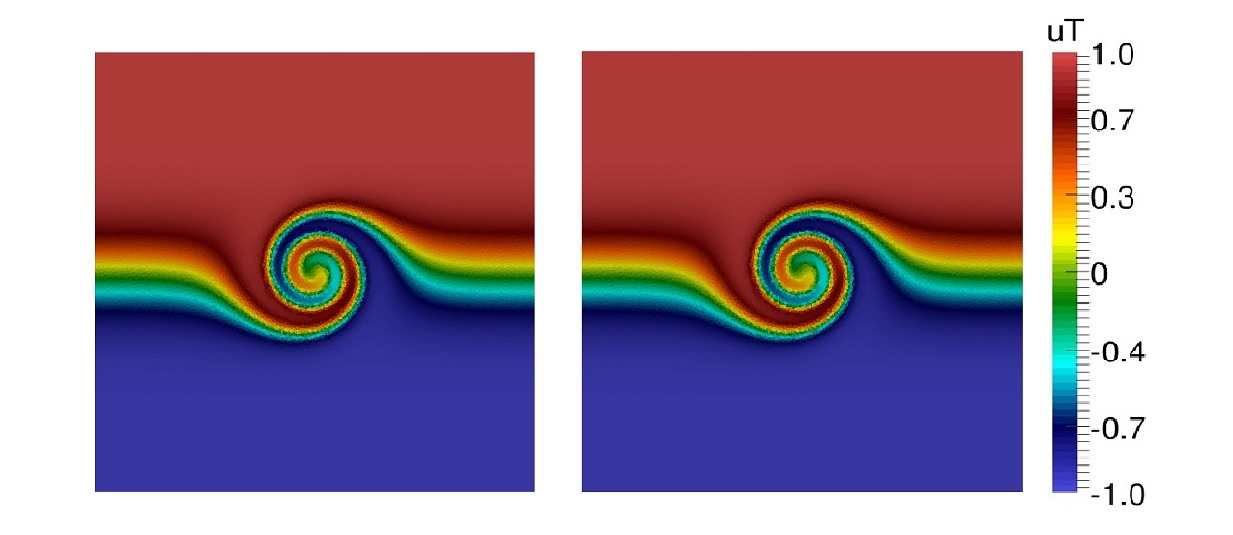

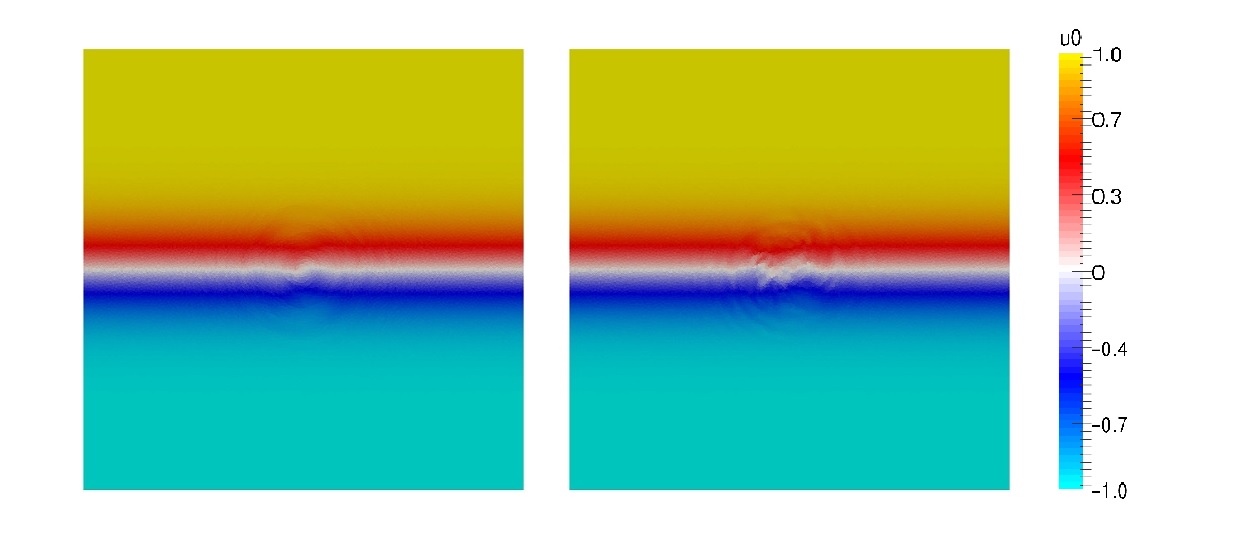

In our numerical experiments we take the values $\delta=1$ and $T=4s$. The exact initial condition and the exact target are shown in Figure 3 (left and right respectively). As can be seen, the target presents a vortex-type profile, due to the heterogeneous geometry of the velocity field.

Our goal is to build a numerical method capable of predicting accurately and efficiently an approximation of (\ref{tc2Exact}) out of the target (\ref{tc2Initial}) by means of a numerical version of the optimisation algorithm above.

The domain is discretized in 39998 unstructured triangles using Triangle [8] in a uniform Delaunay mesh. The initial condition for the first iteration is $u^0(x,y,0)=0$. Both the forward and backward resolution are parallelized with OPENMP in 4 cores. The maximum number of iterations for the gradient method is set to 75, a constant step $\varepsilon=0.5$ is selected and the Courant number is set to $CFL=0.5$.

Additionally, the step size $\varepsilon$ is set as a constant at the beginning of each iteration. However, if the convergence is not guaranteed, i.e., $J^{k+1}>=J^k $, the step size is halved until $J^{k+1}<J^k $. Therefore, the stopping criteria for the iterative algorithm are:

- To reach the maximum number of iterations

- To achieve the maximum allowable error of the functional, in this case, $10^{-9}$

- To descend below $\varepsilon_{min} = 10^{-6}$ when halving the step size $\varepsilon$ trying to achieve convergence.

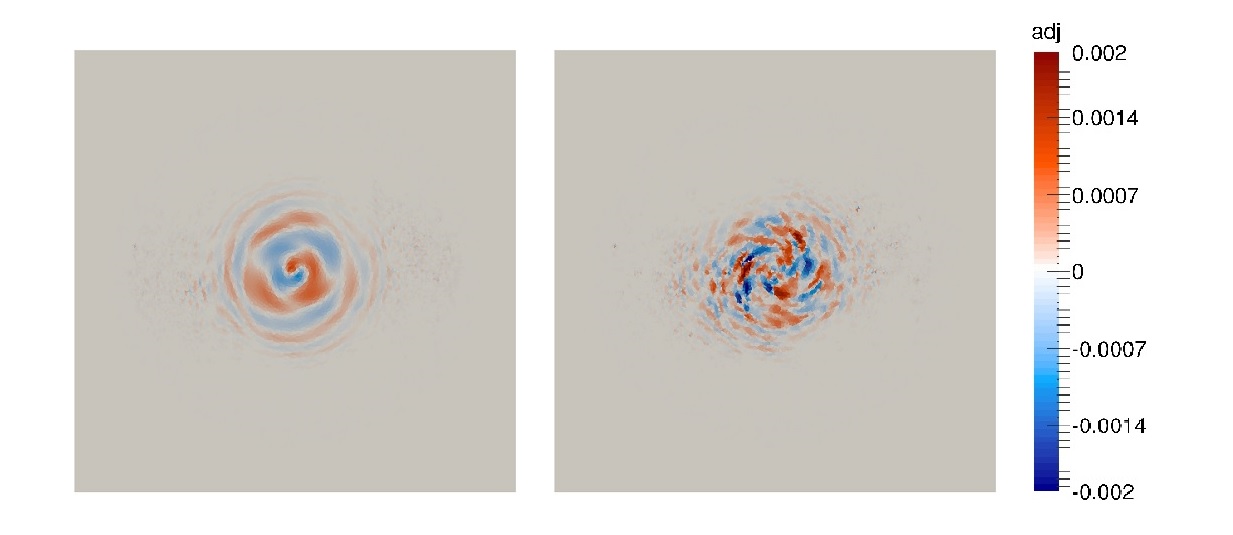

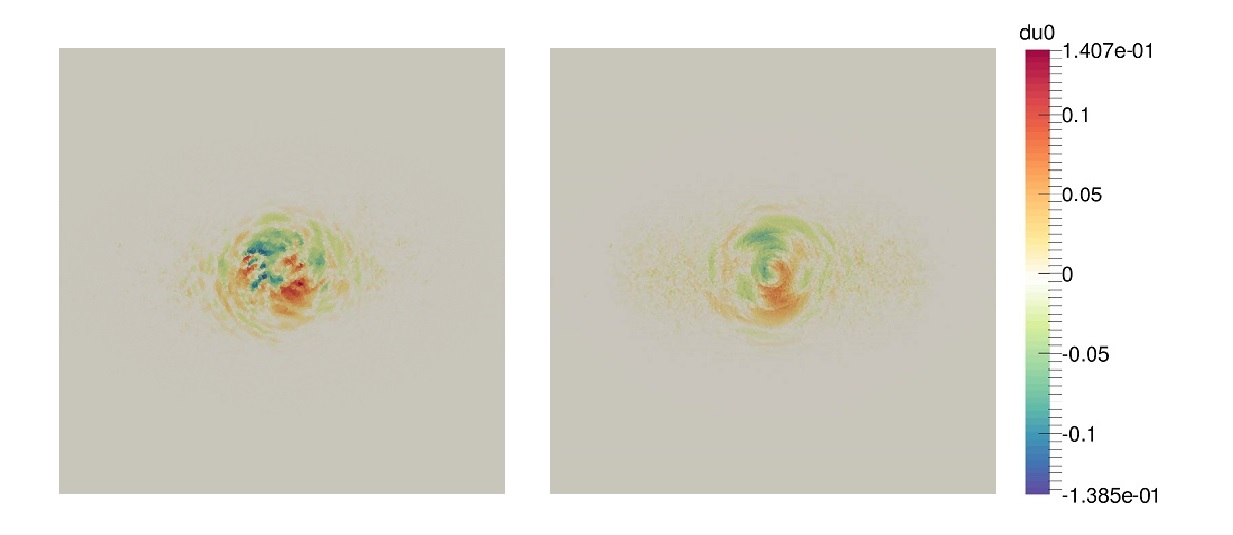

The numerical results are shown in Figures 4, 5 and 6 for the target $u(x,y,T)$, initial condition $u(x,y,0)$ and adjoint $\sigma (x,y,0)$ respectively at the last iteration of the gradient method. On the left side the $\nabla J^{FOU}$ approach is displayed while the $\nabla J^{SOU}$ resolution is illustrated on the right side.

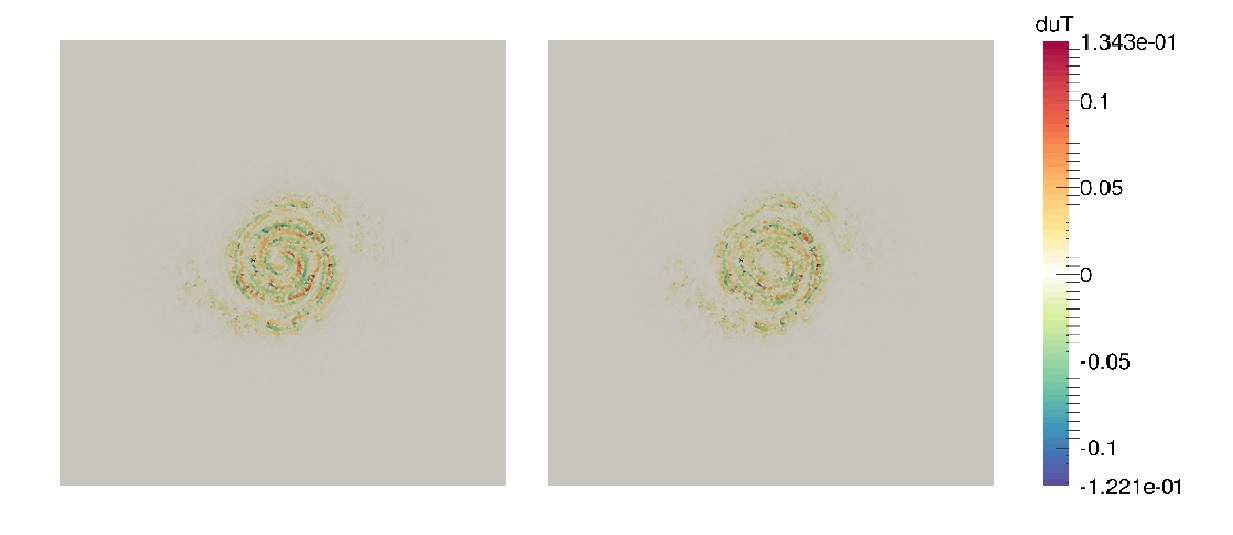

In order to complete the qualitative results, the error computed as the difference between the exact and the numerical approximation is displayed in Figures 7 and 8 for the target $u(x,y,T) – u^*(x,y)$ and the initial datum $u(x,y,0) – u^e(x,y)$.

Quantitative results can be obtained by means of the computation of $L_2$ and $L_{\infty}$ norms, not only with respect to the target function but also to the known exact initial condition $u^e$ in order to evaluate the results achieved by $\nabla J^{FOU}$ and $\nabla J^{SOU}$:

\begin{equation}

\begin{split}

L_2 (u^T) = \sqrt{\sum_i^N (u(x_i,T)-u^*(x_i))^2} \qquad L_{\infty} (u^T) = \max_i |u(x_i,T)-u^*(x_i)| \\

L_2 (u^0) = \sqrt{\sum_i^N (u(x_i,0)-u^e(x_i))^2} \qquad L_{\infty} (u^0) = \max_i |u(x_i,0)-u^e(x_i)|

\end{split}

\label{normsDefinition}

\end{equation}

Table 1 condenses this information as well as the number of iterations and the CPU time for each proposed scheme. For the sake of clarity and for each comparison, the best results are highlighted in bold font.

| Scheme | $L_2$ ($u^T$) | $L_\infty$ ($u^T$) | $L_2$ ($u^0$) | $L_\infty$ ($u^0$) | nIters | CPU Time (s) |

|---|---|---|---|---|---|---|

| FOU | 1.37127e+00 | 1.37078e-01 | 1.36043e+00 | 8.64870e-02 | 75 | 366.26 |

| SOU | 1.23889e+00 | 1.34271e-01 | 1.88749e+00 | 1.40718e-01 | 45 | 488.61 |